Introduction

In this article, I would like to describe a problem we faced with our T-Cup product. The whole application is hosted on AWS. We use Amazon API Gateway to serve the REST API, along with Lambda functions that handle the requests. The functions are written in Node.js and store their data in Amazon RDS. As the database engine we use PostgreSQL, as we find it suitable for our purpose.

Recently, we decided to test our product and see how many requests we could handle without any visible performance flaws. It turned out that after some decent (but not too heavy) load, lambdas stopped working with an error that indicated that the maximum number of database connections was reached. We needed to find a proper solution since our planned load is clearly much larger than our solution could handle.

We started by gathering some background to better understand the problem.

AWS Lambda with Sequelize

How the connection pool works with sequelize

First, we need to understand how a connection pool works. In general, opening a connection is a resource-consuming operation: each open connection requires about 10 MB of RAM, and establishing it takes a noticeable amount of time. So it's common practice to create a connection, leave it open, and put it into a connection pool, where the application can use it later and - most importantly - reuse it. This can save a lot of RAM and other server resources.

In sequelize, you configure the pool through the pool option. The most important settings are:

- min - the minimum number of connections in the pool (default: 0)

- max - the maximum number of connections in the pool (default: 5)

- idle - the maximum time a connection can be idle before being released (default: 10000 ms)

- evict - the time interval after which sequelize-pool removes idle connections (default: 1000 ms)

So after the connection is removed from the pool, it can be safely closed by the database engine.
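The pool settings listed above could be written as a plain options object, roughly as in the sketch below. The values are the defaults quoted in the list; the host name, database name, and credentials are hypothetical placeholders, and the constructor call is left as a comment so the snippet runs without the sequelize package installed.

```javascript
// Pool settings as described above; the values are the defaults quoted in the list.
const poolOptions = {
  min: 0,      // minimum number of connections kept in the pool
  max: 5,      // maximum number of connections in the pool
  idle: 10000, // ms a connection may stay idle before it is released
  evict: 1000, // ms between sweeps that remove idle connections
};

// Hypothetical connection settings; the host below is a placeholder, not a real endpoint.
const sequelizeOptions = {
  dialect: 'postgres',
  host: 'example-db.internal',
  pool: poolOptions,
};

// With the sequelize package installed, the options would be passed like this
// (database name and credentials are placeholders):
// const { Sequelize } = require('sequelize');
// const sequelize = new Sequelize('database', 'username', 'password', sequelizeOptions);

console.log(sequelizeOptions.pool);
```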

Database connections on Amazon RDS

On Amazon RDS, each database instance has a maximum number of connections that can be opened at the same time. It can be configured as a database parameter. By default, the value is calculated based on the memory available on the machine where the database is hosted. The formula is

LEAST({DBInstanceClassMemory/9531392},5000)

It may look like an artificial boundary, but as the number of connections approaches that limit, performance drops dramatically, so raising the limit above the calculated value is not an option.
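The formula above can be evaluated with a few lines of code. This is only a sketch: it assumes the input is the DBInstanceClassMemory value in bytes (the memory available to the database process, which is lower than the nominal RAM of the instance) and that the result is rounded down. The function and constant names are made up for illustration.

```javascript
// Divisor and cap taken from the formula LEAST({DBInstanceClassMemory/9531392},5000).
const BYTES_PER_CONNECTION = 9531392;
const CONNECTION_CAP = 5000;

// memoryBytes is assumed to be DBInstanceClassMemory in bytes.
// Rounding down is an assumption of this sketch.
function defaultMaxConnections(memoryBytes) {
  const byMemory = Math.floor(memoryBytes / BYTES_PER_CONNECTION);
  return Math.min(byMemory, CONNECTION_CAP);
}

// Illustrative input only: 2 GiB of memory available to the database process.
console.log(defaultMaxConnections(2 * 1024 ** 3));
```

Because of the LEAST(...) cap, the result never exceeds 5000, however much memory is passed in.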

A database connection in PostgreSQL can be in one of the following states:

- active - the connection is currently running a query.

- idle - the application has opened a connection to the DB (most frameworks do this and maintain a pool of them), but nothing is happening on it.

- idle in transaction - the application has run a BEGIN, but it's now waiting somewhere inside the transaction and not doing any work.
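To see how the open connections are spread across the states above, one could read the state column of PostgreSQL's pg_stat_activity view and tally the rows. The sketch below only does the tallying; the query string is shown for reference, the sample rows are made up, and countByState is a hypothetical helper name.

```javascript
// Query one could run to list the state of each connection to the current database.
const STATE_QUERY =
  'SELECT state FROM pg_stat_activity WHERE datname = current_database()';

// Tally rows of the form { state: 'idle' } into { idle: n, active: m, ... }.
function countByState(rows) {
  const counts = {};
  for (const { state } of rows) {
    const key = state ?? 'unknown'; // state can be NULL for some backends
    counts[key] = (counts[key] || 0) + 1;
  }
  return counts;
}

// Made-up sample rows standing in for a real query result.
const sampleRows = [
  { state: 'active' },
  { state: 'idle' },
  { state: 'idle' },
  { state: 'idle in transaction' },
];

console.log(STATE_QUERY);
console.log(countByState(sampleRows));
```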

Based on the formula above, the number of available connections per RDS instance class looks like this:

- t2.micro - 66

- t2.small - 150

- t2.medium - 312

- t2.large - 648

- m3.xlarge - 1237

Now that we have covered the theory, we can see how it works with AWS Lambda.

Exhausting database connections from Postgresql

In monolith applications, we have a straightforward situation. One application manages the database connection, and we can easily configure the number of connections in the pool. In distributed systems, it can be more complicated. However, in microservice architecture with a shared database, the number of working instances is rather small, so it's quite easy to set a proper number of connections in the pool of each microservice.

The situation becomes quite interesting when it comes to lambdas. Usually, we need to configure the connection pool in the code of the lambdas. Lambdas are executed in separate containers, which autoscale as more and more requests need to be handled. So a separate connection pool is created for each lambda container. With the wrong configuration, this can exhaust the database connections really fast.

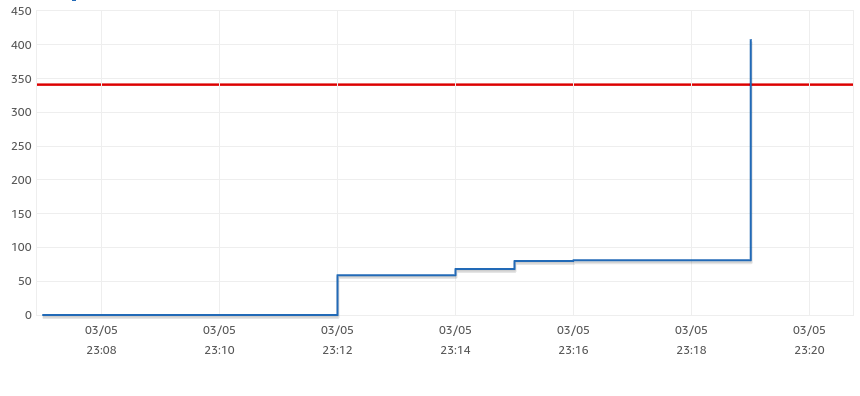
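The arithmetic behind this is simple: every container can hold up to the pool's max connections, so the worst case is the product of the two. The sketch below works this out for a limit of 312 connections, the value quoted in this article for the test instance. The function names are made up, and the calculation ignores any connections used by other clients.

```javascript
// Worst case: every container fills its own pool completely.
function worstCaseConnections(containers, poolMax) {
  return containers * poolMax;
}

// How many containers fit under the database limit if each fills its pool.
function containersBeforeExhaustion(maxConnections, poolMax) {
  return Math.floor(maxConnections / poolMax);
}

const LIMIT = 312; // connection limit quoted in the article for the test instance

console.log(containersBeforeExhaustion(LIMIT, 5)); // pool max of 5 per container
console.log(containersBeforeExhaustion(LIMIT, 1)); // pool max of 1 per container
console.log(worstCaseConnections(100, 5));         // 100 containers, pool max of 5
```

With a pool max of 5, about 62 fully loaded containers are enough to reach the limit; with a pool max of 1, the same limit leaves room for 312 containers.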

Let's analyze the results of our tests. We used JMeter to generate the desired amount of traffic. We started with 10000 users who generated about 100000 requests in 10 minutes, which is ca. 167 requests per second. In the test results, I will not show how much time each request took - we will focus on the number of open connections. We used an RDS t2.medium instance for the tests.

We started with the default sequelize configuration that is:

pool: { min: 0, max: 5, idle: 10000, }

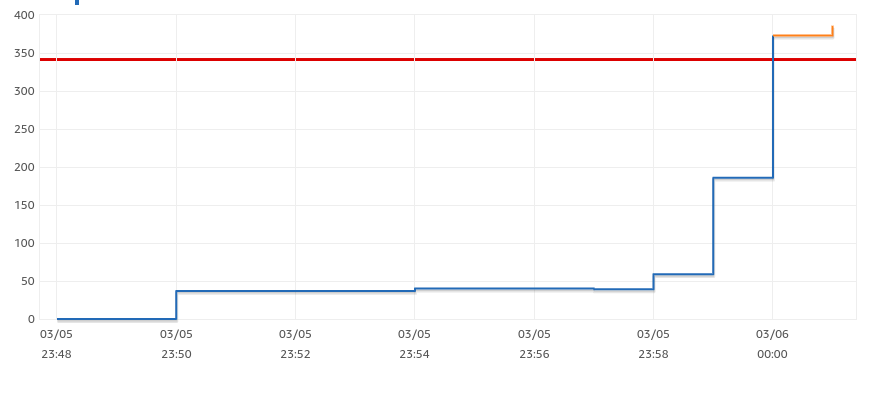

So each lambda container can open up to 5 database connections. We ended up exceeding the connection limit, even for quite a small number of users. See the chart below:

You can see that the number of connections grew steadily at first. Then, at one moment, the lambda containers autoscaled, each new container created 5 new connections, and suddenly we went over the allowed number of connections.

Configuration changes to fix exhausting issues

Reduce the number of connections

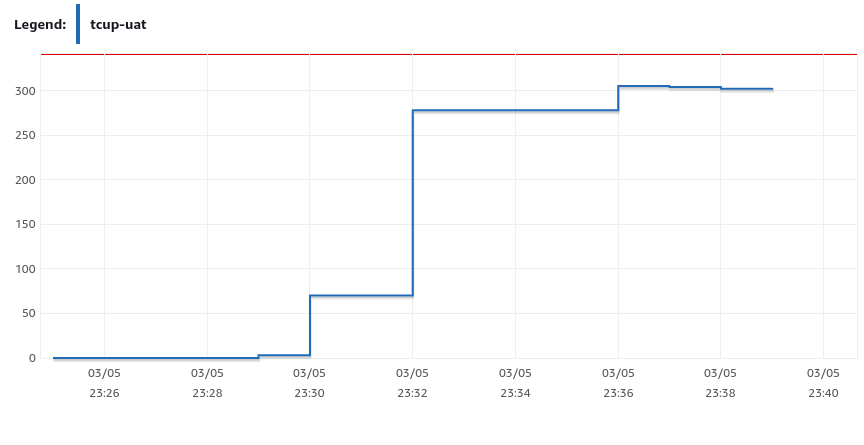

There is a big spike - it means that the lambdas needed additional containers. Each of them requires 5 new connections, and they were all created at the same time. We decided to reduce the maximum number of open connections to 1. We wondered how this would affect request performance, but it turned out to be even better than with the previous configuration. The explanation is quite straightforward: lambdas are executed in parallel, but within a single container execution is sequential. So even if several users share the same container, their requests are handled one by one.

So our first action was to reduce the maximum number of connections to 1, and our configuration looked like this:

pool: { min: 0, max: 1, idle: 10000, }

Then the connections chart looks like this. It's much better than the previous, and the test eventually passed, but it almost reached the max number of connections. We still needed to find some other improvements to reduce the number of open connections.

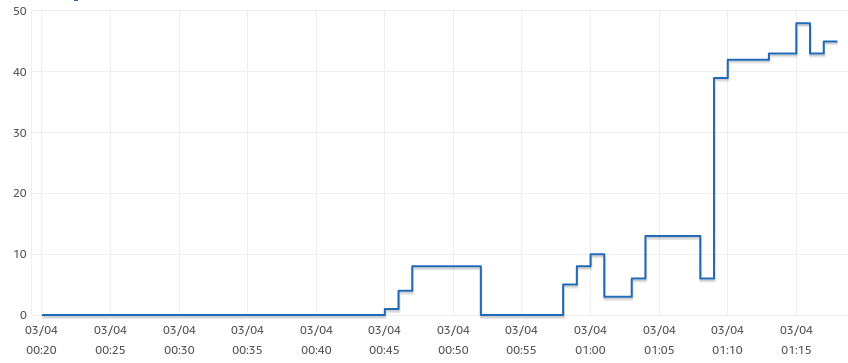

Reduce idle time

We took a closer look at the idle parameter used in our tests. It determines how long a connection can stay idle in the pool before it is released. It's set to 10 seconds by default. For our usage that is definitely too much: the lambda timeout is 6 seconds in our case, and on average each lambda executes in ~300 ms. An idle time of 10 seconds means that some connections stayed open after the lambda had finished its job, and they remained open even when the lambda was not handling any requests. We reduced the idle time to 1 second and ran the test suite again.

Our configuration looks like this:

pool: { min: 0, max: 1, idle: 1000, }

And the connection chart looks like this:

This means that we have, at most, only 48 open connections. This is far below the max limit, which is 312 for that database instance. So we were able to handle 167 requests per second without any problem.

We also wondered whether reducing the idle time further would result in better performance. It turns out that we cannot lower it much more, because once a connection is released from the pool, it isn't immediately removed by the database engine. If the idle time is shorter than the lambda execution time, the pool may release a connection and then open a new one to replace it while the old one is still open on the database side. Let's see how the application behaves when we lower the idle parameter to 100 ms.

As you can see, we end up with database connection exhaustion. The number of connections was quite steady at the beginning but grew dramatically as more and more requests came in.

Eventually, we left idle at 1 second, and we can easily handle ca. 167 requests per second on a t2.medium RDS instance with great performance.
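The observations above suggest a rough rule of thumb: the idle time should be longer than the average lambda execution time, but no longer than the lambda timeout. The check below is a sketch of that rule only; it is inferred from the test results in this article and is not an official sequelize or AWS guideline. The function name is hypothetical.

```javascript
// Classify an idle setting against the average execution time and the lambda timeout.
// All values are in milliseconds.
function checkIdleTime(idleMs, avgExecutionMs, lambdaTimeoutMs) {
  if (idleMs <= avgExecutionMs) {
    return 'too low'; // connections may be released while the lambda is still busy
  }
  if (idleMs > lambdaTimeoutMs) {
    return 'too high'; // connections outlive any possible invocation
  }
  return 'ok';
}

// Figures from the article: ~300 ms average execution, 6 s lambda timeout.
console.log(checkIdleTime(10000, 300, 6000)); // default idle time
console.log(checkIdleTime(100, 300, 6000));   // idle lowered to 100 ms
console.log(checkIdleTime(1000, 300, 6000));  // the value we settled on
```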

Change the RDS Database instance

As the number of requests keeps growing, we will eventually need a bigger database instance, such as t2.large. However, keep in mind that upgrading the database without proper configuration is not an option: even a powerful instance will not handle a larger number of users if the configuration stays unchanged. We tested this on an m3.xlarge machine and still suffered from connection exhaustion with the default configuration.

Summary

At Solid Studio Software House, our goal was to handle over 1000 requests per second without a significant performance drop. Thanks to the configuration changes described above, we managed to do so with an average response time of ca. 300 ms on an RDS t2.xlarge instance. Serverless architecture requires a different perspective, but with proper configuration it can perform really well without high costs.
